What I Do

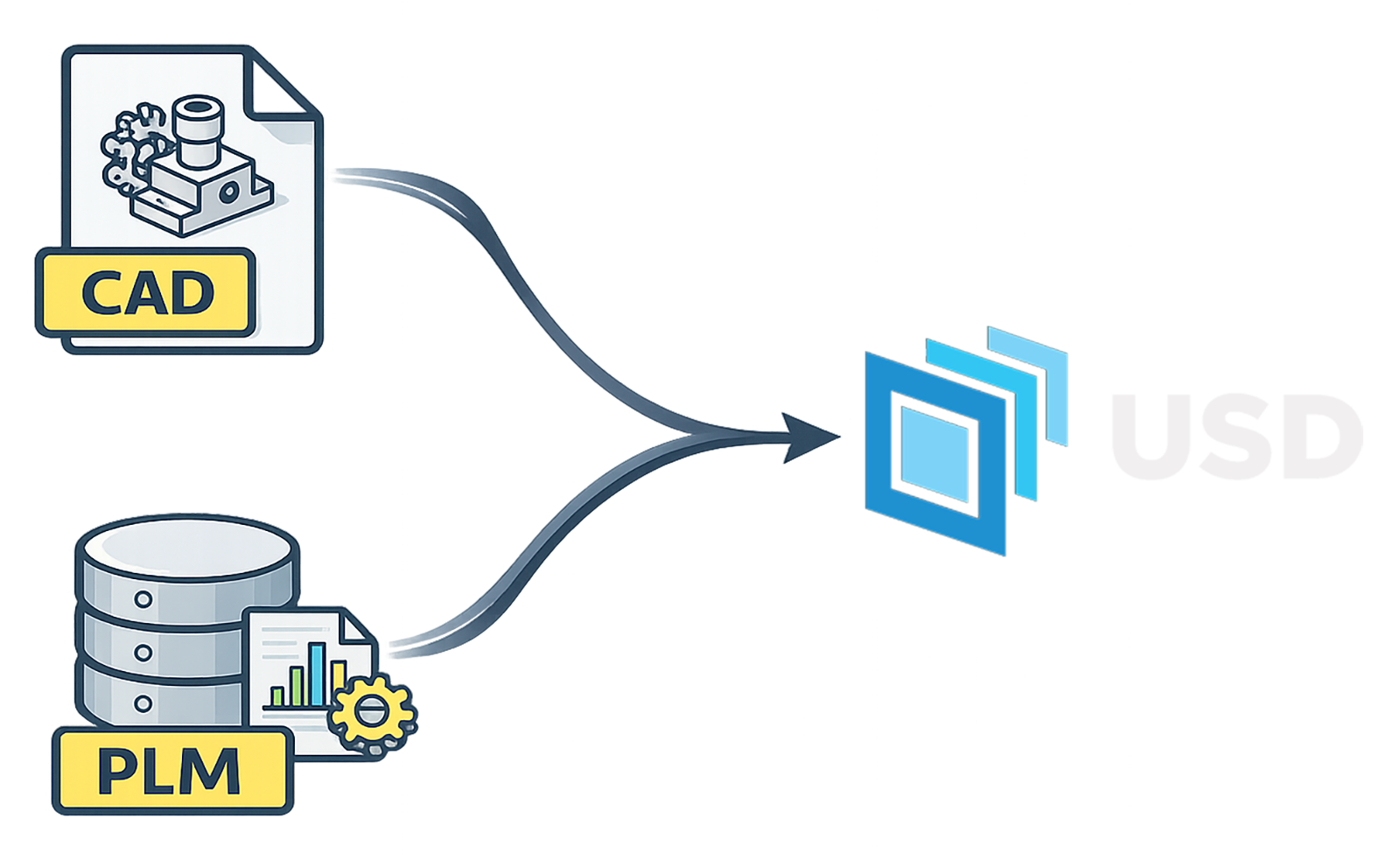

I design and own production systems that turn raw engineering data into reliable, reusable 3D assets for visualization, automation, and interactive applications.

This work goes beyond importing CAD. I build pipelines that stay stable under scale, constant revisions, multiple downstream consumers, and real production pressure. I am NVIDIA-Certified in OpenUSD, and I treat USD as the backbone of these systems rather than just an export format.

Core Focus

- Enterprise CAD ingestion and assembly handling

- USD authoring, structure, variants, and metadata strategy

- Houdini procedural processing and PDG orchestration

- Python automation across CAD, DCCs, and data services

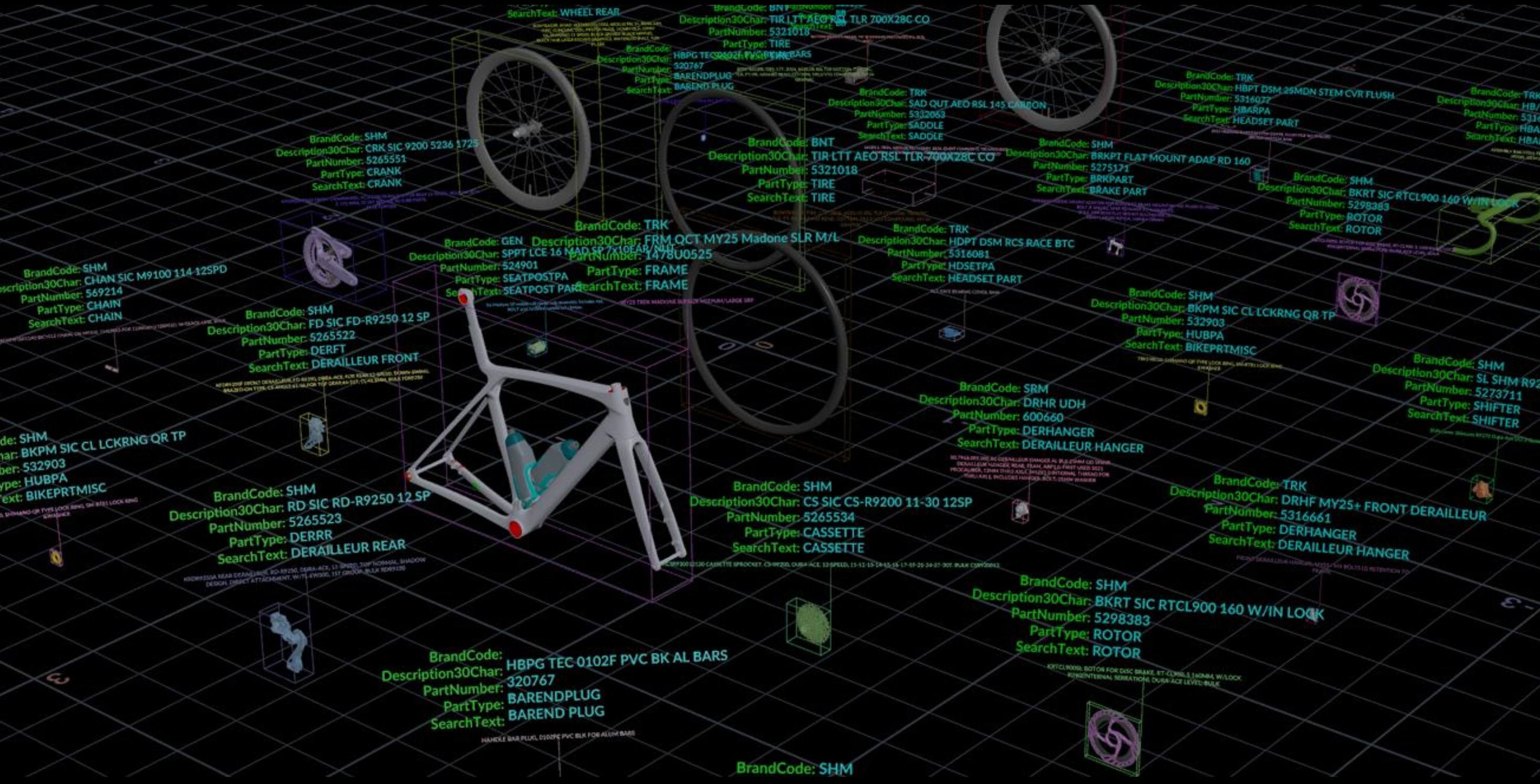

- PLM and engineering metadata integration

Deeper technical detail lives in Projects. The big picture lives in Pipeline.

USD Strategy

I build my pipeline work around USD because it allows systems to scale without locking the organization to a single DCC or a single rendering stack. USD becomes a stable contract between engineering data, processing tools, and downstream consumers.

In practice, this means treating assets as structured, layered data: assemblies that can be composed, reconfigured, and regenerated deterministically. Variants and metadata capture product intent. Referencing and payload patterns keep scenes lightweight while still supporting enterprise-scale builds.

- Interchange contract: USD is the handoff format between teams and tools, not a final “baked” deliverable.

- Determinism: builds can be reproduced from source inputs, with consistent structure and naming.

- Scalability: composition patterns keep large assemblies manageable for multiple consumers.

- Longevity: USD preserves value over time even as DCCs and renderers change.
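As a rough illustration of the determinism and payload points above, here is a minimal sketch that writes an assembly layer as plain `.usda` text using only the Python standard library. It is not my production code: the function names (`prim_name`, `assembly_layer`), the part IDs, and the file paths are all hypothetical, and a real pipeline would normally author layers through the USD Python API rather than string formatting.

```python
import re


def prim_name(part_id: str) -> str:
    """Map an arbitrary part identifier to a valid, stable USD prim name."""
    # Prim names may only contain letters, digits, and underscores.
    name = re.sub(r"[^A-Za-z0-9_]", "_", part_id)
    # They must also start with a letter or underscore.
    # Note: distinct ids such as "A-1" and "A_1" would collide; a real
    # system needs a rule for that (assumption: ids here are already unique).
    return name if re.match(r"[A-Za-z_]", name) else f"_{name}"


def assembly_layer(root: str, parts: dict[str, str]) -> str:
    """Return .usda text for an assembly whose children payload part layers.

    `parts` maps a part id to the asset path of that part's USD layer.
    """
    lines = [
        "#usda 1.0",
        "(",
        f'    defaultPrim = "{root}"',
        ")",
        "",
        f'def Xform "{root}"',
        "{",
    ]
    # Sorting makes the output independent of the input ordering, so the
    # same source data always produces a byte-identical layer.
    for part_id in sorted(parts):
        lines += [
            f'    def Xform "{prim_name(part_id)}" (',
            f"        prepend payload = @{parts[part_id]}@",
            "    )",
            "    {",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


# Hypothetical part ids and paths, for illustration only.
print(assembly_layer("Bike", {
    "WH-200": "./parts/wheel.usda",
    "FR-1042": "./parts/frame.usda",
}))
```

Because each child is a payload rather than inline geometry, a consumer can open the assembly without loading every part, and because the output is sorted and derived only from the inputs, regenerating the layer from the same source yields the same file.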

I expand on this in Procedural USD Assembly Authoring on the Projects page.

Houdini and Procedural Pipelines

I use Houdini as a procedural engine for data processing and USD authoring, not just content creation. Proceduralism is a practical advantage in pipeline work because it expresses transformations, validation, and derived data as repeatable rules rather than manual edits.

Combined with PDG, Houdini becomes an execution fabric for large-scale builds: dependency graphs, caching, retries, and batch processing make it feasible to run enterprise workflows reliably. I keep pipeline logic in Python modules where possible, and use Houdini to orchestrate and apply procedural steps where it provides the most leverage.

- Proceduralism: consistent results from rules, not fragile hand-tuning.

- Scale: batch-oriented processing for large assemblies and frequent revisions.

- Operational reliability: validation hooks, failure handling, and deterministic regeneration.
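The ideas behind that execution model (dependency ordering, caching, and retries) can be sketched in a few lines of plain Python. This does not use the PDG API and is not how PDG is implemented; `run_graph`, the task names, and the revision labels are hypothetical and exist only to show the concepts.

```python
import hashlib
import json
from graphlib import TopologicalSorter


def run_graph(tasks, deps, inputs, cache, max_retries=2):
    """Run tasks in dependency order, skipping any whose inputs are unchanged.

    tasks:  name -> callable(input_value, upstream_results) -> JSON-serializable result
    deps:   name -> set of upstream task names
    inputs: name -> JSON-serializable input for that task (optional per task)
    cache:  dict reused across runs, keyed by a hash of inputs + upstream results
    Returns (results, executed), where `executed` lists the tasks that actually ran.
    """
    results, executed = {}, []
    # static_order() yields every task after all of its dependencies.
    for name in TopologicalSorter(deps).static_order():
        upstream = {d: results[d] for d in sorted(deps.get(name, ()))}
        # The cache key covers the task's own input and everything upstream,
        # so a change anywhere above invalidates exactly the affected tasks.
        payload = json.dumps([name, inputs.get(name), upstream], sort_keys=True)
        key = hashlib.sha256(payload.encode()).hexdigest()
        if key not in cache:
            for attempt in range(max_retries + 1):
                try:
                    cache[key] = tasks[name](inputs.get(name), upstream)
                    break
                except Exception:
                    # Re-raise only once the retry budget is exhausted.
                    if attempt == max_retries:
                        raise
            executed.append(name)
        results[name] = cache[key]
    return results, executed


# Hypothetical two-step build: tessellate a CAD revision, then author USD from it.
tasks = {
    "tessellate": lambda rev, up: f"mesh@{rev}",
    "author_usd": lambda _, up: f"usd({up['tessellate']})",
}
deps = {"tessellate": set(), "author_usd": {"tessellate"}}
cache = {}
results, executed = run_graph(tasks, deps, {"tessellate": "revA"}, cache)
```

Running the same graph again with an unchanged revision executes nothing, while a new revision re-runs only the tasks downstream of the change. That is the property that makes frequent CAD revisions cheap to process.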

Implementation examples live in Projects.

Success & Metrics

A well-managed backend pipeline lets the team create beautiful work with a high degree of accuracy. It delivers a growing set of data and assets that the team relies on every day.

- 700+ USD-authored parts across 3,500+ versions

- 110+ USD bike builds across 624+ versions

- 700+ downstream art files

- 530+ product reference files

This foundation enables the team to produce the imagery shown below.