A production ingestion system that joins source CAD and enterprise PLM into deterministic USD assemblies.

Overview

This system is built around two authoritative inputs: CAD geometry from the source modeler and product definition from the enterprise PLM system.

CAD is extracted directly from the host application (for example, Onshape or SolidWorks) to preserve intent and maximize control. Pulling data at the source lets me set export specifications, capture configuration context, and retrieve metadata that is not reliably represented in neutral exchange formats alone.

PLM data varies by company, but it consistently carries the business logic that turns geometry into a real product: identity, lifecycle state, effectivity, options, and relationships. Joining PLM with CAD enables true product definitions to be captured and cataloged, not just geometric snapshots.

CAD Extraction Layer

CAD is king, and it deserves first-class treatment. The highest-leverage approach is a direct interface to the CAD system, not a one-off export. That interface yields geometry plus the context that makes it usable at scale: assembly structure, occurrence transforms, configuration state, and CAD-native metadata.

- Source-integrated, not export-driven: CAD is accessed via the host API so extraction is deterministic and repeatable.

- Assembly graph capture: The full assembly hierarchy is pulled so downstream USD structure is authored from truth, not reconstructed.

- Occurrence-level transforms: Per-occurrence transforms and instance intent are extracted to preserve accurate placement and instancing.

- Configuration context: The exact CAD state (version plus configuration choices) is recorded so builds can be reproduced.

- Controlled extraction specifications: Geometry is exported as Parasolid with defined settings wherever possible, and as STEP only as a fallback, so outputs stay consistent and reproducible.

- CAD-native metadata: Properties that do not survive neutral formats cleanly are captured and carried forward where they add value.

- Multi-host pattern: The same extraction contract applies across CAD hosts, even though the APIs differ.

In the current production system, this layer interfaces with Onshape via a REST API using Python. I have achieved the same results against SolidWorks using its C# API. The pattern is portable and applies to any CAD host that exposes an API capable of providing structure, occurrence transforms, and controlled exports.
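The shared extraction contract can be pictured as a small set of types that every host adapter returns, regardless of how it talks to the CAD system. The following is a minimal sketch, not the production interface: the names `Occurrence`, `ExtractionResult`, `CadHost`, `choose_format`, and `FakeHost` are all hypothetical, and the fake host makes no real Onshape or SolidWorks calls.

```python
from dataclasses import dataclass, field
from typing import Protocol

# Preference order taken from the text: Parasolid first, STEP as fallback.
FORMAT_PREFERENCE = ("parasolid", "step")


@dataclass(frozen=True)
class Occurrence:
    """One placed instance of a part or subassembly (hypothetical shape)."""
    path: tuple[str, ...]         # occurrence path from the assembly root
    part_id: str                  # CAD-side identifier of the referenced part
    transform: tuple[float, ...]  # 4x4 matrix flattened to 16 floats


@dataclass(frozen=True)
class ExtractionResult:
    """What every host adapter returns for one extraction (hypothetical shape)."""
    document_version: str              # exact CAD version that was read
    configuration: dict[str, str]      # configuration choices in effect
    occurrences: list[Occurrence]
    geometry_format: str               # format actually used for export
    metadata: dict[str, dict[str, str]] = field(default_factory=dict)


class CadHost(Protocol):
    """Contract an adapter implements; the API behind it differs per host."""
    def available_formats(self) -> set[str]: ...
    def extract(self, document_id: str,
                configuration: dict[str, str]) -> ExtractionResult: ...


def choose_format(available: set[str]) -> str:
    """Pick the first preferred format the host can export."""
    for fmt in FORMAT_PREFERENCE:
        if fmt in available:
            return fmt
    raise ValueError(f"no supported export format in {sorted(available)}")


IDENTITY = (1.0, 0.0, 0.0, 0.0,
            0.0, 1.0, 0.0, 0.0,
            0.0, 0.0, 1.0, 0.0,
            0.0, 0.0, 0.0, 1.0)


class FakeHost:
    """In-memory stand-in for a real adapter; it only exports STEP."""

    def available_formats(self) -> set[str]:
        return {"step"}

    def extract(self, document_id, configuration):
        return ExtractionResult(
            document_version=f"{document_id}@v1",
            configuration=dict(configuration),
            occurrences=[Occurrence(("root", "bolt_1"), "bolt", IDENTITY)],
            geometry_format=choose_format(self.available_formats()),
        )


result = FakeHost().extract("doc-123", {"size": "M8"})
```

Because downstream steps depend only on `ExtractionResult`, a second host can be added by writing another adapter without touching meshing or USD authoring.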

PLM Integration and Product Definition

PLM is the product backbone. CAD tells you what the geometry looks like. PLM tells you what it is to the business: identity, lifecycle state, effectivity, and relationships. When PLM data is joined with CAD, the pipeline can author USD that represents a true product definition rather than a standalone geometric snapshot.

- Stable identity: PLM provides durable identifiers (part numbers, revisions) that survive CAD restructuring and support deterministic naming and traceability.

- Lifecycle and effectivity: Release state and effectivity logic define what is valid, when it is valid, and for whom, which is critical for building the "right" product view.

- Relationship graph: BOM structure and parent-child relationships provide the business view of assemblies, substitutes, alternates, and serviceable components.

- Downstream leverage: PLM metadata becomes a driver for automation: filtering, publish gating, search, categorization, and hooks for material or process assignment.

- Configuration runway: PLM is the natural home for option logic and rules, enabling variant-driven builds as the system evolves.

- Vendor-neutral contract: PLM implementations vary from company to company, but the integration pattern stays consistent: ingest the authoritative product definition and map it onto the CAD-derived structure.

In the current production pipeline, PLM data is ingested from ENOVIA through a SQL Server-backed interface implemented in Python. While the transport and schema differ across organizations, the role of PLM does not: it provides the business logic that turns CAD geometry into a complete, auditable USD product definition.
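As a rough illustration of the join, the sketch below maps PLM records onto CAD occurrences by part number, gates on lifecycle state, and derives a stable prim name from PLM identity. It is a simplified sketch under stated assumptions: the record fields, the `"Released"` state name, and the helpers `join_plm` and `prim_name` are hypothetical and do not reflect the ENOVIA schema. The naming helper only enforces the USD rule that prim names contain letters, digits, and underscores and do not start with a digit.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class PlmRecord:
    """Minimal product-definition record (hypothetical fields)."""
    part_number: str
    revision: str
    lifecycle_state: str


def join_plm(occurrence_parts, plm_records, allowed_states=frozenset({"Released"})):
    """Join CAD occurrences to PLM records.

    occurrence_parts: dict of occurrence path -> part number (from CAD).
    plm_records:      dict of part number -> PlmRecord (from PLM).
    Returns (joined, unmatched, gated) so nothing is dropped silently.
    """
    joined, unmatched, gated = {}, [], []
    # Sorted iteration keeps the output order independent of input order.
    for occ_path, part_number in sorted(occurrence_parts.items()):
        record = plm_records.get(part_number)
        if record is None:
            unmatched.append(occ_path)      # present in CAD, unknown to PLM
        elif record.lifecycle_state not in allowed_states:
            gated.append(occ_path)          # known, but not valid to publish
        else:
            joined[occ_path] = record
    return joined, unmatched, gated


def prim_name(record):
    """Derive a deterministic, USD-safe prim name from PLM identity."""
    raw = f"{record.part_number}_{record.revision}"
    name = re.sub(r"[^A-Za-z0-9_]", "_", raw)
    # Prim names may not start with a digit, so prefix an underscore.
    return f"_{name}" if name[0].isdigit() else name


plm = {
    "100-2345": PlmRecord("100-2345", "B", "Released"),
    "100-9000": PlmRecord("100-9000", "A", "In Work"),
}
occurrences = {
    "/root/bracket_1": "100-2345",
    "/root/cover_1": "100-9000",
    "/root/shim_1": "999-0000",
}
joined, unmatched, gated = join_plm(occurrences, plm)
```

Returning the unmatched and gated occurrences alongside the joined ones keeps the build auditable: every CAD occurrence ends up in exactly one of the three buckets.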

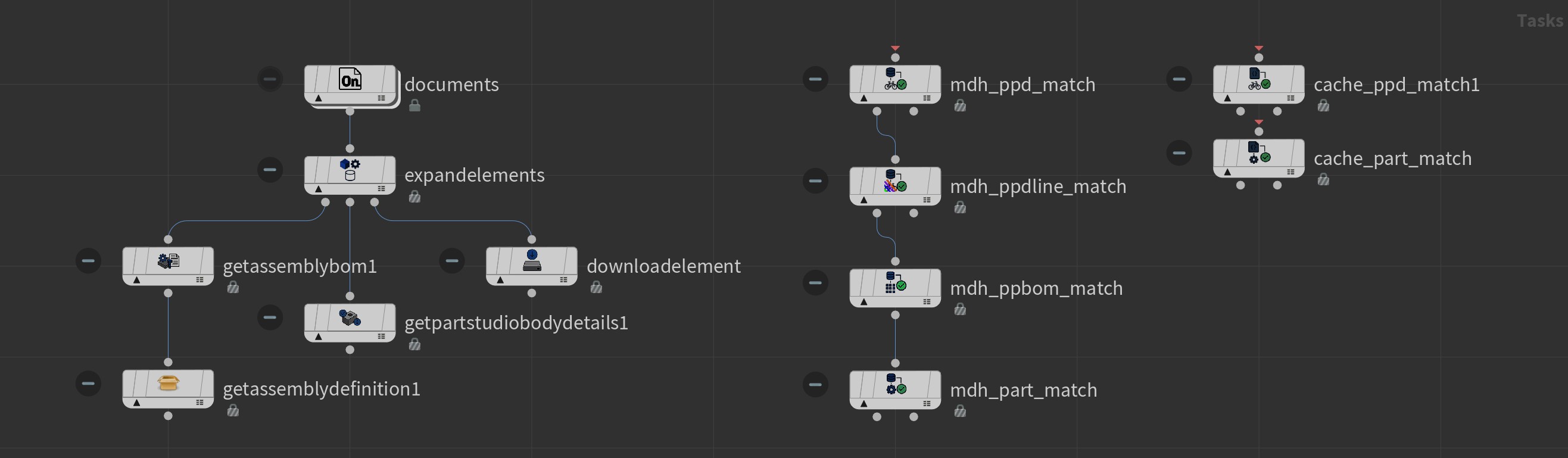

Orchestration and Systems Integration (PDG)

PDG is the integration surface. The CAD and PLM interfaces are implemented as discrete Python modules, and PDG is where those interfaces are composed into a single build graph that produces the final USD output. I develop PDG HDAs in Houdini that wrap and sequence these modules, turning independent capabilities into a repeatable system.

- Graph-based orchestration: Build steps are expressed as dependencies, making the pipeline easier to reason about, extend, and debug.

- Modular interfaces: CAD extraction, PLM ingestion, meshing, and USD authoring remain separate units with clear inputs and outputs.

- Operational leverage: PDG provides scheduling, batching, caching, retries, and farm scaling without rebuilding a custom orchestration layer.

- Determinism and regeneration: Given the same upstream inputs, the build can be reproduced, validated, and regenerated on demand.

- Production hardening: Failure handling, logging, and resumable partial progress are treated as requirements for running under constant revision.

Design principle: Houdini PDG is the execution fabric, not the identity of the system. Core pipeline logic lives in standalone Python packages, while PDG HDAs define how those capabilities are composed into reliable, production-scale builds.
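The separation between standalone logic and the execution fabric can be sketched without Houdini at all. The example below is not PDG code and uses no PDG API; it is a minimal stand-in that runs plain Python step functions in dependency order using the standard library's `graphlib`. The step names and the `run_build` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def extract_cad(ctx):
    # Stand-in for the CAD extraction module.
    return {"parts": ["bracket", "bolt"]}


def ingest_plm(ctx):
    # Stand-in for the PLM ingestion module.
    return {"bracket": "Released", "bolt": "Released"}


def mesh(ctx):
    # Consumes only the output of extract_cad.
    return [f"{part}.mesh" for part in ctx["extract_cad"]["parts"]]


def author_usd(ctx):
    # Consumes meshes and product definition; the final output of the build.
    return {"meshes": ctx["mesh"], "plm": ctx["ingest_plm"]}


STEPS = {
    "extract_cad": extract_cad,
    "ingest_plm": ingest_plm,
    "mesh": mesh,
    "author_usd": author_usd,
}

# Each step maps to the set of steps that must finish before it.
DEPENDENCIES = {
    "extract_cad": set(),
    "ingest_plm": set(),
    "mesh": {"extract_cad"},
    "author_usd": {"mesh", "ingest_plm"},
}


def run_build(steps, dependencies):
    """Run every step after its dependencies and return (order, results)."""
    order = list(TopologicalSorter(dependencies).static_order())
    ctx = {}
    for name in order:
        ctx[name] = steps[name](ctx)
    return order, ctx


order, results = run_build(STEPS, DEPENDENCIES)
```

In this arrangement the step functions know nothing about the scheduler. Swapping the toy runner for a real orchestrator changes how steps are scheduled, batched, and retried, not what they compute.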

Ownership and Operations

This is a production system I own end-to-end. I designed and implemented the architecture, built the core interfaces, and operate the pipeline under constant revision pressure. Ownership includes the integration boundaries, data contracts, and reliability needed to regenerate outputs deterministically as upstream data changes.

- Architecture ownership: I define the ingestion contract from CAD and PLM through processing and into authored USD assemblies.

- Integration ownership: I maintain the interfaces to CAD systems, PLM systems, and the supporting services required to produce publishable outputs.

- Operational reliability: Logging, failure modes, retries, and recoverability are treated as first-class requirements, not afterthoughts.

- Deterministic regeneration: Builds are structured to be repeatable so outputs can be reproduced, validated, and refreshed as revisions occur.

- Downstream readiness: Outputs are authored with stability in mind for multiple consumers, including visualization, rendering, and real-time workflows.
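One way to make deterministic regeneration checkable is to fingerprint the upstream inputs of a build, so that two builds with the same fingerprint are expected to produce the same output. The following is a minimal sketch of that idea, not the production mechanism: the `build_fingerprint` function and its parameter names are assumptions chosen for illustration.

```python
import hashlib
import json


def build_fingerprint(cad_version, configuration, plm_revisions, export_settings):
    """Hash the inputs that define a build into a stable hex digest.

    cad_version:     identifier of the exact CAD version that was read.
    configuration:   dict of configuration choices in effect.
    plm_revisions:   dict of part number -> revision used for the build.
    export_settings: dict of geometry export settings.
    """
    payload = {
        "cad_version": cad_version,
        "configuration": configuration,
        "plm_revisions": plm_revisions,
        "export_settings": export_settings,
    }
    # sort_keys and fixed separators give one canonical serialization,
    # so dict insertion order cannot change the digest.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


first = build_fingerprint(
    "doc-123@v7",
    {"size": "M8", "finish": "zinc"},
    {"100-2345": "B"},
    {"format": "parasolid"},
)
# Same inputs, different key order: the fingerprint must not change.
reordered = build_fingerprint(
    "doc-123@v7",
    {"finish": "zinc", "size": "M8"},
    {"100-2345": "B"},
    {"format": "parasolid"},
)
# A PLM revision bump is a real input change and must change the fingerprint.
revised = build_fingerprint(
    "doc-123@v7",
    {"size": "M8", "finish": "zinc"},
    {"100-2345": "C"},
    {"format": "parasolid"},
)
```

A fingerprint like this can serve as a cache key or be stored next to the published output, which makes it straightforward to tell whether a regeneration was triggered by a real upstream change.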

The result is not a one-time conversion tool. It is an ingestion and publishing system designed to stay stable as source data evolves, while remaining extensible for future configuration and digital twin requirements.